Day 1 & 2: Final Presentation

On the first day of this week, I worked on finalizing my presentation and shared it with my mentor. After receiving his feedback, I made changes and focused on rehearsing the final presentation that I had to present to the entire team of HPCC systems (Richard Chapman, Vijay Raghavan, Michael Gardner, Lorraine Chapman, David de Hilster) along with other interns of summer 2022. On Aug. 2nd, I presented about the expectations that were set in the beginning and amount of work accomplished during the nine weeks period. I am very happy that I received a positive feedback and appreciation from entire team and my mentor. Since the presentation could not be recorded due to technical issues in Lorraine’s network I will soon record my presentation and share it to my manager, Lorraine and mentor, David.

Day 3, 4 & 5: Transforming KB to XML

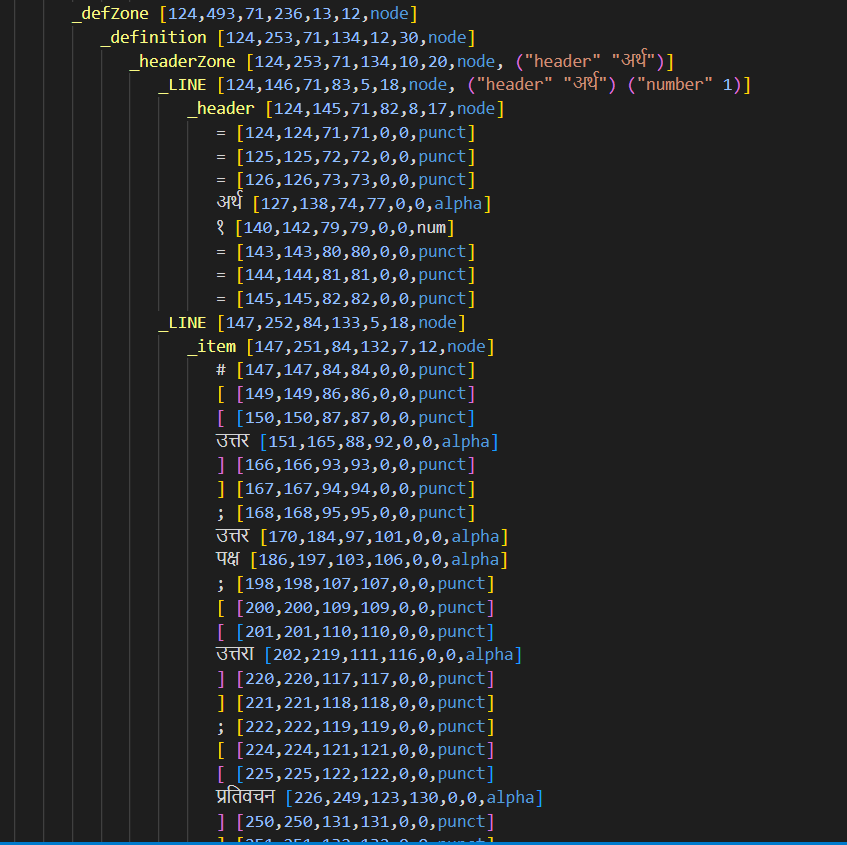

Though I already presented my work of this summer I still have three more weeks of work remaining during which I have to accomplish remaining expectations that was set in deliverables before starting the project. Therefore, I started working on writing code in NLP++ to transform knowledge base developed earlier to XML format using which XML file of each Wiktionary word entry can be formed. For that, since it failed to spray data file in the ECL Watch, I am now writing and displaying out in word.xml file. With the help of my mentor, I learned to transform the knowledge base to XML. While doing so, I learned to use While con, strstartswith, and strval function to create a while loop, condition if string starts with string name such as “pronunciation”, “definition”, “synonym”, etc., and get the string value of concept built in knowledge base respectively. The final output of XML file was created and was tested on all input word files. It is writing XML file correctly for each word content except for the case when there are more than phonetic/phonemic in the pronunciation record. For example, the final output of word ” ” in the XML format is as follows:

<word>

<wordid>1</wordid>

<word>जवाफ</word>

</word>

<pos>

<wordid>1</wordid>

<posid>1</posid>

<pos>नाम</pos>

</pos>

<definition>

<posid>1</posid>

<defid>1</defid>

</definition>

<explanation>

<defid>1</defid>

<expid>1</expid>

<text>उत्तर; उत्तर पक्ष; उत्तरा; प्रतिवचन</text>

</explanation>

<variation>

<defid>1</defid>

<varid>1</varid>

<text>उत्तर पक्ष</text>

</variation>

<variation>

<defid>1</defid>

<varid>2</varid>

<text>उत्तरा</text>

</variation>

<variation>

<defid>1</defid>

<varid>3</varid>

<text>प्रतिवचन</text>

</variation>

<example>

<defid>1</defid>

<exampid>1</exampid>

<text>श्यामले आफ्नो प्रियसीलाई छोडेर जाने कारण सोध्दा प्रियसीले केहि जवाफ दीनन।</text>

</example>

<pronunciation>

<wordid>1</wordid>

<phoneticid>1</phoneticid>

<pttext>javāpha</pttext>

</pronunciation>

<synonym>

<wordid>1</wordid>

<synid>1</synid>

<text>उत्तर</text>

</synonym>

<synonym>

<wordid>1</wordid>

<synid>2</synid>

<text>प्रतिक्रिया</text>

</synonym>

<term>

<wordid>1</wordid>

<termid>1</termid>

<text>जवाफदेही</text>

</term>

<term>

<wordid>1</wordid>

<termid>2</termid>

<text>जवाफमाग्नु</text>

</term>

<term>

<wordid>1</wordid>

<termid>3</termid>

<text>जवाफदिनु</text>

</term>

<language>

<wordid>1</wordid>

<langid>1</langid>

<text>अङ्ग्रेजी</text>

</language>

<translation>

<langid>1</langid>

<transid>1</transid>

<text>answer</text>

</translation>

<translation>

<langid>1</langid>

<transid>2</transid>

<text>reply</text>

</translation>

<translation>

<langid>1</langid>

<transid>3</transid>

<text>response</text>

</translation>

As shown above, I could able to transform the knowledge base of each word input file to the output in XML format.